Terraforming AWS: a serverless website backend, part 1

What if you could define all the infrastructure for your cloud application using code or text, apply your design and changes automatically, and then collaborate with your team in source control?

That’s the promise of Terraform, an infrastructure-as-code tool from HashiCorp.

Introduction

Rob Sherling wrote a great article on how to build a website backend on serverless infrastructure. This article will take you through the first stages of constructing Rob’s infrastructure design using Terraform, and explain some Terraform concepts and rules along the way. Thanks in advance to Rob, who allowed me to write this article on top of his code and design!

This article, like Rob’s original, assumes that:

- You have an AWS account, know how to use it, and are comfortable with some small charges (though this generally fits into the free tier).

- You have the AWS CLI installed and configured.

- You have a domain name you can use for this project.

- You’re comfortable with basic JavaScript - don’t worry, we will just be modifying some existing code.

- You have at least heard of the following (if not, read a bit on the ones you don’t know):

  - AWS Lambda

  - API Gateway

  - CloudWatch

  - DynamoDB

  - KMS

  - IAM

  - The mail service Mailgun

  - The API tool Postman

You might need more later on, but if you have the above you can build something today!

We’re going to use all of these services, plus some application code, to build an entire application. If you would rather learn the basics by starting up some VMs on EC2, try David Schmitz’s article on Using Terraform for Cloud Deployments.

So what is Terraform?

Terraform is a tool for building, changing, and versioning infrastructure safely and efficiently. Terraform can manage existing and popular service providers as well as custom in-house solutions.

Configuration files describe to Terraform the components needed to run a single application or your entire datacenter. Terraform generates an execution plan describing what it will do to reach the desired state, and then executes it to build the described infrastructure. As the configuration changes, Terraform is able to determine what changed and create incremental execution plans which can be applied.

In other words, you describe your application infrastructure in human readable config/code, and Terraform handles all the detail of how to make that happen at your cloud provider.

Both Terraform and CloudFormation allow you to achieve similar results at AWS, but Terraform supports many more cloud providers than just AWS, as well as letting you work with scenarios like multiple AWS accounts, or composing different cloud providers into one deployed application.

What are we actually building?

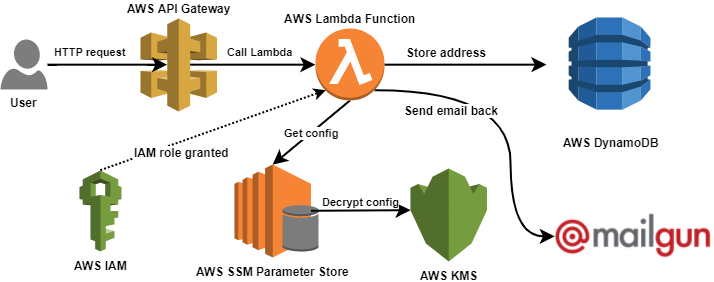

We are going to make the backend for a website to be used in a marketing campaign - it will gather Twitter handles and email addresses for potential customers, and allow you to send them messages. To be hip and modern, we’re going to make the backend ‘serverless‘ by using entirely AWS cloud services (mainly Lambda and DynamoDB) to reduce costs and maintenance.

In part 1 of this article series, we will just tackle the initial email capture and storage.

Here’s how the main pieces plug together:

What do I need?

You’ll need a few things to complete the whole series, but for part 1, all you need is the following:

Terraform

Download it, and place it in your PATH.

Postman

Install it. We’ll use this to test your backend API.

Mailgun account

Sign up for a free account. We’ll use this to send the emails. Don’t link your domain yet (if you did already, delete it). Make sure you set up some authorized recipients to use while testing.

Node.js

Install it. The infrastructure we build will also support Python, Java and C# (.NET Core), but the example code in this article series is all Node.js.

Optional: Text editor plugins

If you’ve graduated to something more advanced than Notepad (and you really should!), installing syntax highlighting and editor settings can make a big difference to your day:

- Atom syntax highlighting

- VS Code syntax highlighting, linting, formatting and validation

- Sublime Text syntax highlighting and code snippets

Getting started

As we go along I will introduce various Terraform and AWS concepts, and link to their documentation. You can lay Terraform files out in whatever order you like - I’ve only chosen this order to slowly introduce new concepts.

Setup

Create a new directory for this project, and a new text file in there called terraform.tf.

Before we get started with setting up infrastructure in AWS, we need to tell Terraform’s AWS provider how to talk to the AWS API. All we need to cover for now is the AWS region - the provider will pick up your credentials from the AWS CLI you already configured. Don’t worry too much about the variable, we’ll cover that later.

    variable "region" {
      default = "eu-west-1"
    }

    provider "aws" {
      region = "${var.region}"
    }

You’ll notice the code looks pretty much like the config files you’re used to, e.g. JSON or YAML. You can set the region value to your nearest region if you wish.
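If you would rather not edit the default in terraform.tf, one option is to override the variable from a separate file. The sketch below assumes a file named `terraform.tfvars` in the project directory, which Terraform loads automatically when it is present; the region value shown is only an illustration.

```hcl
# terraform.tfvars
# Overrides the "default" given in the variable "region" block.
# "us-east-1" is an example value, pick whichever region is nearest to you.
region = "us-east-1"
```

The same override can be passed for a single run on the command line with `-var 'region=us-east-1'`.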

After providers, Terraform is built around the concept of resources. Generally these correspond to ‘things’ on offer at your cloud provider, such as virtual machines or servers, databases, stored files, etc. Sometimes they actually refer to links or associations between two resources, or other pieces of configuration.

Database

We will start with the DynamoDB table - this has a 1:1 relationship with a Terraform resource. Add this into your terraform.tf:

    resource "aws_dynamodb_table" "emails" {
      name           = "emails"
      read_capacity  = 2
      write_capacity = 2
      hash_key       = "email"

      attribute {
        name = "email"
        type = "S"
      }
    }

Let’s break this down:

- aws_dynamodb_table is the resource type provided by the AWS provider.

- The first emails is the name for this resource - but in Terraform only. You can be as generic or descriptive as you like, but like in any software development, it’s good practice to be able to understand what something is by just reading the name.

- The second emails is the actual DynamoDB table name - you’ll see this in the AWS console and refer to it in code.

- The rest of the arguments are specific to the resource - check out the docs if you want to know more.
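To make the difference between the two names concrete, here is a small sketch that is not part of the original design: an output that refers to the table by its Terraform name (`aws_dynamodb_table.emails`) and exposes the ARN that AWS assigns. The output name `emails_table_arn` is made up for this illustration.

```hcl
# Refers to the resource by its Terraform-side name ("emails", the first label),
# not by the DynamoDB table name in the "name" argument.
output "emails_table_arn" {
  value = "${aws_dynamodb_table.emails.arn}"
}
```

Once the table has been created, `terraform output emails_table_arn` should print the value. Renaming the DynamoDB table would not change this reference, whereas renaming the Terraform resource would.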

Init

Terraform is based on a pluggable design - the components that actually talk to cloud providers and create resources are downloaded automatically and stored in your project directory. So before you can call AWS, you need to tell Terraform to download the AWS provider:

    λ terraform init

    Initializing provider plugins...
    - Checking for available provider plugins on https://releases.hashicorp.com...
    - Downloading plugin for provider "aws" (1.11.0)...

    The following providers do not have any version constraints in configuration,
    so the latest version was installed.

    To prevent automatic upgrades to new major versions that may contain breaking
    changes, it is recommended to add version = "..." constraints to the
    corresponding provider blocks in configuration, with the constraint strings
    suggested below.

    * provider.aws: version = "~> 1.11"
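Following the suggestion in that output, a minimal sketch of a pinned provider block might look like this. It replaces the existing `provider "aws"` block rather than adding a second one, and it uses the constraint string Terraform printed above; your own run may suggest a different version.

```hcl
provider "aws" {
  # Accept any 1.x release from 1.11 onwards, but never a new major version.
  version = "~> 1.11"
  region  = "${var.region}"
}
```

This is the form used by the Terraform release shown in this article. Newer Terraform releases prefer declaring provider versions in a `required_providers` block instead, so treat this as matching the article's setup rather than as current best practice.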

Plan and apply

The next step is to let Terraform build a plan of the changes you’ve requested. Go ahead and run terraform plan - the AWS provider in Terraform will look at your current AWS setup and plan out what it needs to do to deploy your infrastructure. It should look something like this:

    λ terraform plan
    Refreshing Terraform state in-memory prior to plan...
    The refreshed state will be used to calculate this plan, but will not be
    persisted to local or remote state storage.

    ------------------------------------------------------------------------

    An execution plan has been generated and is shown below.
    Resource actions are indicated with the following symbols:
      + create

    Terraform will perform the following actions:

      + aws_dynamodb_table.emails
          id:                        <computed>
          arn:                       <computed>
          attribute.#:               "1"
          attribute.3226637473.name: "email"
          attribute.3226637473.type: "S"
          hash_key:                  "email"
          name:                      "emails"
          read_capacity:             "2"
          server_side_encryption.#:  <computed>
          stream_arn:                <computed>
          stream_label:              <computed>
          stream_view_type:          <computed>
          write_capacity:            "2"

    Plan: 1 to add, 0 to change, 0 to destroy.

    ------------------------------------------------------------------------

    Note: You didn't specify an "-out" parameter to save this plan, so Terraform
    can't guarantee that exactly these actions will be performed if
    "terraform apply" is subsequently run.

What does this show?

- Your new resource, aws_dynamodb_table.emails, is shown with all of its properties. As the + indicates, this is a resource due for creation.

- The values marked <computed> will be assigned by AWS when the table is created. Remember this is only Terraform’s plan of action, so these don’t exist yet.

By the way - if you have anything else already set up in your AWS account, you will notice it does not appear here. You can safely mix manually managed resources with Terraform resources, and even with multiple Terraform projects.

At this point you can go ahead with terraform apply, and answer yes where requested:

    λ terraform apply

    An execution plan has been generated and is shown below.
    Resource actions are indicated with the following symbols:
      + create

    Terraform will perform the following actions:

      + aws_dynamodb_table.emails
          id:                        <computed>
          arn:                       <computed>
          attribute.#:               "1"
          attribute.3226637473.name: "email"
          attribute.3226637473.type: "S"
          hash_key:                  "email"
          name:                      "emails"
          read_capacity:             "2"
          server_side_encryption.#:  <computed>
          stream_arn:                <computed>
          stream_label:              <computed>
          stream_view_type:          <computed>
          write_capacity:            "2"

    Plan: 1 to add, 0 to change, 0 to destroy.

    Do you want to perform these actions?
      Terraform will perform the actions described above.
      Only 'yes' will be accepted to approve.

      Enter a value: yes

    aws_dynamodb_table.emails: Creating...
      arn:                       "" => "<computed>"
      attribute.#:               "" => "1"
      attribute.3226637473.name: "" => "email"
      attribute.3226637473.type: "" => "S"
      hash_key:                  "" => "email"
      name:                      "" => "emails"
      read_capacity:             "" => "2"
      server_side_encryption.#:  "" => "<computed>"
      stream_arn:                "" => "<computed>"
      stream_label:              "" => "<computed>"
      stream_view_type:          "" => "<computed>"
      write_capacity:            "" => "2"
    aws_dynamodb_table.emails: Still creating... (10s elapsed)
    aws_dynamodb_table.emails: Creation complete after 14s (ID: emails)

    Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

If you get tired of typing yes, you can always add a -auto-approve flag to skip confirmation.

You might have noticed that the table was provisioned with read and write capacities of 2. That’s probably overkill for this project, so edit terraform.tf to set both to 1, and run terraform plan again.
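After the edit, the table resource would read roughly as follows. Only the two capacity values differ from the version applied earlier, and terraform plan then reports exactly that difference:

```hcl
resource "aws_dynamodb_table" "emails" {
  name           = "emails"
  read_capacity  = 1 # was 2
  write_capacity = 1 # was 2
  hash_key       = "email"

  attribute {
    name = "email"
    type = "S"
  }
}
```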

      ~ aws_dynamodb_table.emails
          read_capacity:  "2" => "1"
          write_capacity: "2" => "1"

This time around you can see that the table is due for a change (marked with ~) - Terraform won’t destroy and recreate it. The changed arguments are shown underneath. At this point, terraform apply should be self-explanatory:

    aws_dynamodb_table.emails: Modifying... (ID: emails)
      read_capacity:  "2" => "1"
      write_capacity: "2" => "1"

If you run terraform state list, you will see a list of everything that Terraform manages in your AWS account. You can check terraform state show aws_dynamodb_table.emails to get the details of a specific resource.

Permissions and security

IAM

We’ll be running the backend server logic in a Lambda function, but before we build that we need to set up an IAM role and attach some policies to it. This will give our function permission to access other AWS services.

    data "aws_caller_identity" "current" {}

    resource "aws_iam_role" "LambdaBackend_master_lambda" {
      name = "LambdaBackend_master_lambda"
      path = "/"

      assume_role_policy = <<POLICY
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Service": "lambda.amazonaws.com"
          },
          "Action": "sts:AssumeRole"
        }
      ]
    }
    POLICY
    }

    resource "aws_iam_role_policy_attachment" "LambdaBackend_master_lambda_AmazonDynamoDBFullAccess" {
      role       = "${aws_iam_role.LambdaBackend_master_lambda.name}"
      policy_arn = "arn:aws:iam::aws:policy/AmazonDynamoDBFullAccess"
    }

    resource "aws_iam_role_policy_attachment" "LambdaBackend_master_lambda_CloudWatchFullAccess" {
      role       = "${aws_iam_role.LambdaBackend_master_lambda.name}"
      policy_arn = "arn:aws:iam::aws:policy/CloudWatchFullAccess"
    }

    resource "aws_iam_role_policy" "ssm_read" {
      name = "ssm_read"
      role = "${aws_iam_role.LambdaBackend_master_lambda.id}"

      policy = <<POLICY
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "ssm:DescribeParameters"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "ssm:GetParameters"
          ],
          "Resource": "arn:aws:ssm:${var.region}:${data.aws_caller_identity.current.account_id}:parameter/${terraform.env}/*"
        }
      ]
    }
    POLICY
    }

There’s some new syntax going on here, so let’s explain:

- The << syntax allows you to include a multi-line string or document. In this case we’re using it for the IAM policy document. After << we have POLICY, telling Terraform to read from that point until the next POLICY marker.

  - The name POLICY isn’t special, anything will do.

  - If you’ve ever scripted a Unix shell, this may look familiar.

  - As you may have guessed, the text between the markers isn’t Terraform configuration - it is the policy document passed to AWS, so if you don’t understand what it means, don’t worry for now. The one exception is the ${...} expressions, which Terraform fills in before sending the document.

- If you don’t want to keep your AWS policy documents in your Terraform code, check out the file() built-in function to load them from disk.

- The role argument relies on Terraform interpolation. This is a fancy way of saying it allows you to get values from elsewhere, whether that is another resource, or some static configuration.

  - If you’re used to an OO programming language, think of this like accessing class members.

  - All we’re really doing here is making sure the policy attachment works, no matter how you name the aws_iam_role. This is an example of Don’t Repeat Yourself, a good practice in any language.

- Don’t worry about the data line for now - we’ll cover that in part two.
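As a sketch of the file() option mentioned in the list above, the role could load its trust policy from disk instead of using an inline document. This is an alternative to the inline version, not an addition to it. The file name `lambda_assume_role.json` is hypothetical, and the sketch assumes it sits next to terraform.tf and holds the same JSON as the inline policy.

```hcl
resource "aws_iam_role" "LambdaBackend_master_lambda" {
  name = "LambdaBackend_master_lambda"
  path = "/"

  # path.module is the directory containing this configuration.
  # lambda_assume_role.json is a hypothetical file holding the trust policy JSON.
  assume_role_policy = "${file("${path.module}/lambda_assume_role.json")}"
}
```

One caveat: file() returns the contents as they are and does not evaluate ${...} expressions inside the file. The ssm_read policy relies on interpolation for its resource ARN, so it could not be moved to a file this way without further changes.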

When you’re happy, go ahead and terraform apply this, and carry on to the next part.